At TAILab, we focus on both the theoretical and applied aspects of trustworthy machine learning:

Theoretical Research:

Our goal is to advance the understanding of trust in machine learning, particularly from an information security perspective. The trustworthiness of ML algorithms spans several research areas, including privacy, robustness, interpretability, explainability, fairness, and governance. At TAILab, our main focus is robustness, i.e., the ability of an ML model to maintain reliable performance when faced with natural noise, data distribution shifts, and intentional adversarial attacks. Our interests cover both (i) data-oriented robustness (statistical robustness), where we take information-theoretic and statistical approaches to noisy data, distributional changes, uncertainty quantification, out-of-distribution (OOD) detection, and model calibration; and (ii) model-oriented robustness (adversarial robustness), where we investigate the fundamental limitations of classification models facing input perturbations, whether those perturbations originate from an intentional adversary or from natural phenomena with adversarial effects. Our work on adversarial robustness ranges from empirical methods to certified robustness. We are particularly interested in applying advances in mathematical optimization and nonconvex relaxation to improve ML robustness bounds in both areas.

Applied Research:

The applied side of our research focuses on translating ML robustness principles into trustworthy AI systems that end users can rely on, with a specific interest in clinical AI. The following examples of applied research, completed or underway with our healthcare partners, illustrate the scope of our work. We design prediction pipelines that expose and control uncertainty at the point of care. For structured data and graphs, we quantify epistemic and aleatoric uncertainty in graph neural networks (GNNs), which supports patient-specific risk stratification and pathway modelling. For medical imaging and segmentation, we go beyond classical confidence measurement toward clinically aware conformal prediction, which allocates larger, morphology- or interface-sensitive prediction sets where uncertainty is concentrated and critical decisions hinge; this work also has applications beyond clinical settings, including robot path planning and navigation. In parallel, for AI applications in mental health, we evaluate and harden LLM-based clinical assistants: we probe the reliability of multi-step reasoning, study failure modes, and couple LLM outputs with calibrated uncertainty and verification hooks so that agentic workflows remain auditable and defer gracefully when confidence is low. Across these applications, the common thread is operational robustness: threat- and shift-aware evaluation, calibrated predictions with coverage guarantees, and lightweight certifiable components that withstand real distribution shifts while meeting clinical accountability standards.

For Prospective Students:

I seek to recruit highly qualified individuals pursuing a graduate degree or a postdoctoral position. I primarily supervise theoretical research on trust in machine learning, with an emphasis on robustness. If your primary interest is purely applied work, please do not email. Competitive applicants should (i) identify a theoretical question aligned with our group’s topics, e.g., robustness–accuracy trade-offs, information-theoretic bounds, certified robustness radii, or other topics related to our research; (ii) write a ≤1-page outline of the problem, the assumptions, and the technical tools they plan to use; and (iii) map that theory to a target application (clinical or otherwise) in an additional ≤1 page, explaining how the analysis or guarantees would improve reliability in practice. When you are ready, email this research statement, your CV, complete transcripts (undergraduate and graduate), and evidence of English proficiency (if applicable), using the subject line format “Prospective Student: (your name) (the degree, e.g., MSc/MEng/PhD/PDF)”. All documents should be sent as email attachments, not as URLs. Due to the volume of emails, only shortlisted candidates will be contacted.

If you are currently an MEng student in our department and are interested in completing a project at the intersection of security and machine learning, I may be able to help you explore topics and projects that suit your background and to supervise your project. Your best chance to get involved is to take the EE8227: Secure Machine Learning course.

Statement on Equity, Diversity, and Inclusion (EDI):

The TAILab Research Group is strongly committed to upholding the values of Equity, Diversity, and Inclusion (EDI). Consistent with the Tri-Agency Statement on EDI and the Dimensions Pilot Program at Toronto Metropolitan University, our group strives to foster an environment in which everyone feels comfortable, safe, supported, and free to speak their minds and pursue their research interests. We recognize that engineering culture can feel exclusionary to groups traditionally underrepresented in STEM fields. By acknowledging the EDI issues that exist in our field, we aim to validate the challenges faced by each group member and continually strive to improve our group’s culture for all members.

TAILab Research Group Meetings:

We meet biweekly to discuss research topics in AI and machine learning security and privacy. Please see the meeting schedule and discussion topics here. If you are interested in attending, please contact Reza Samavi.

Current Projects:

Faculty

Security & Privacy

Trustworthy Machine Learning

Safe and Secure Machine Learning

Optimization

Collaborators/Visiting Researchers

Image Segmentation Confidence Measurement

Differential Privacy

Safe AI

Mechanistic Interpretability

Adversarial Robustness

Research Students

Machine Learning Robustness

Secure Machine Learning

Optimization

Machine Learning

ML Robustness

Machine Learning

Medical AI

Trustworthy Machine Learning

Computational Linguistics

ML Robustness

LLM Confidence

Conformal Prediction

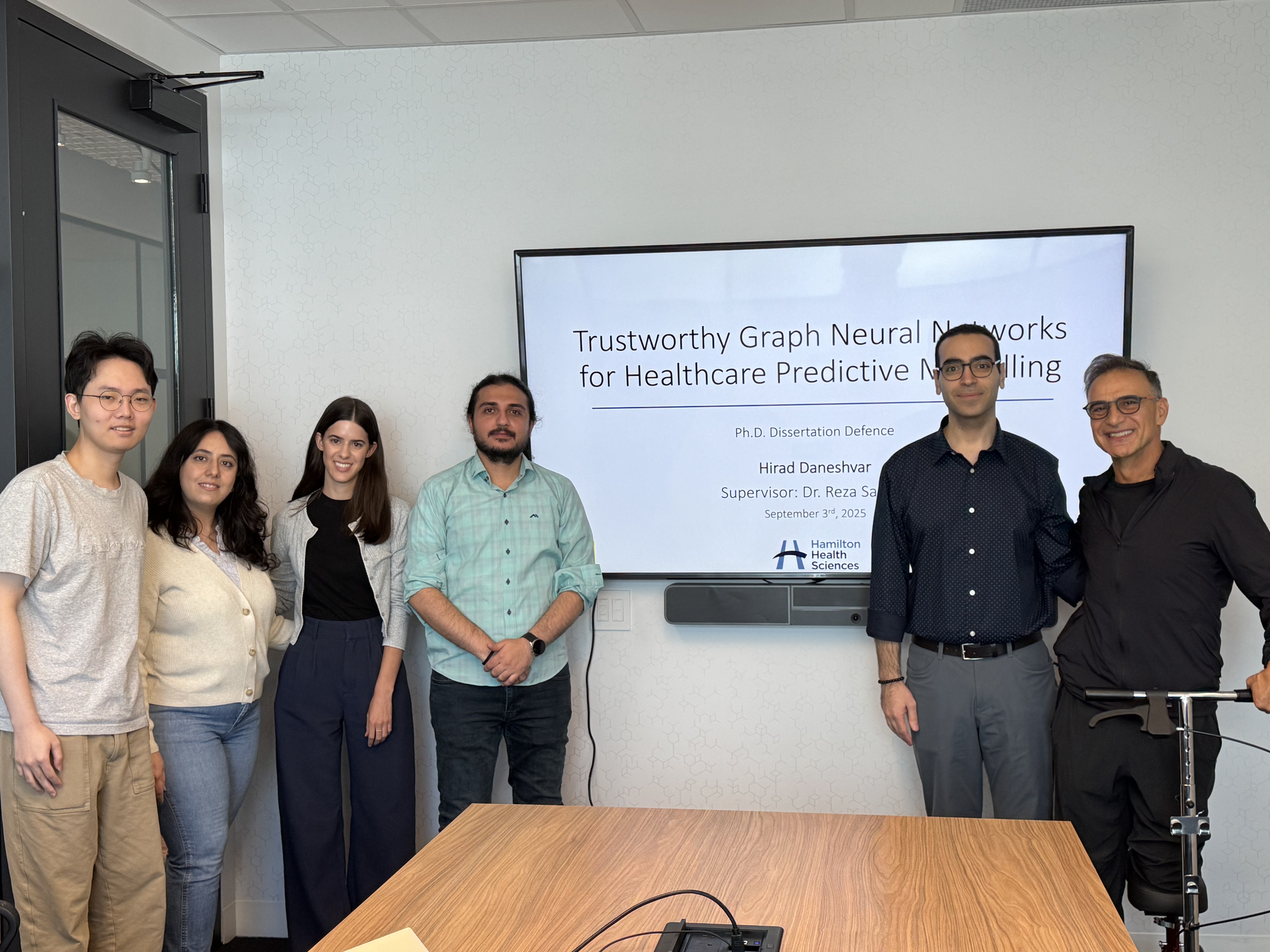

Alumni

Trustworthy GNNs

Medical AI

ML Robustness

Certified Robustness

Medical AI

LLM Privacy

Security, Privacy & Trust

Optimization

Machine Learning

Security & Privacy

Machine Learning

Blockchain

OOD Generalization and Adversarial Robustness

Medical AI

LLM Privacy

LLM Robustness

Security

Cryptography

Machine Learning

Medical AI

Machine Learning Security

Optimization

Machine Learning

Generative Adversarial Networks

Machine Learning

Medical AI

Semantic Web

Machine Learning

Social Good

Security & Privacy

Optimization

Machine Learning

Security & Privacy

Machine Learning

Social Networks

Security & Privacy

Optimization

Machine Learning

Security

Privacy